Nanowerk August 17, 2022

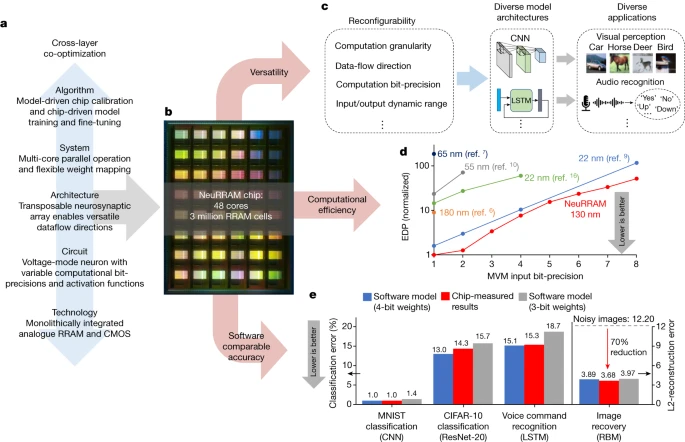

Compute-in-memory (CIM) based on resistive random-access memory (RRAM) meets the energy demands of edge devices by performing AI computation directly within RRAM. Although efficiency, versatility and accuracy are all indispensable for broad adoption of the technology, the interrelated trade-offs among them cannot be addressed by isolated improvements at any single abstraction level of the design. By co-optimizing across all levels of the design hierarchy, from algorithms and architecture to circuits and devices, a team of researchers in the US (Stanford University, UC San Diego, University of Notre Dame, University of Pittsburgh) has developed NeuRRAM, an RRAM-based CIM chip that simultaneously delivers versatility in reconfiguring CIM cores for diverse model architectures, energy efficiency twice that of previous state-of-the-art RRAM-CIM chips across various computational bit-precisions, and inference accuracy comparable to software models quantized to four-bit weights across various AI tasks. Reported results include 99.0 percent accuracy on MNIST and 85.7 percent on CIFAR-10 image classification, 84.7 percent accuracy on Google speech command recognition, and a 70 percent reduction in image-reconstruction error on a Bayesian image-recovery task.

Design methodology and main contributions of the NeuRRAM chip. Credit: Nature volume 608, pages 504–512 (2022)