Phys.org June 18, 2024

Existing methods for single-view 3D reconstruction with neural radiance fields (NeRF) rely either on data priors to hallucinate views of occluded regions, which may not be physically accurate, or on shadows observed by RGB cameras, which are difficult to detect under ambient light and against low-albedo backgrounds. A team of researchers in the US (MIT, industry) proposed overcoming these limitations with time-of-flight data captured by a single-photon avalanche diode (SPAD). Their method models two-bounce optical paths with NeRF, using lidar transient data for supervision. By combining the strengths of NeRF with two-bounce light measured by lidar, they reconstructed both visible and occluded geometry without data priors and without relying on controlled ambient lighting or scene albedo. They also showed improved generalization under practical constraints on sensor spatial and temporal resolution. According to the researchers, their method is a promising direction as single-photon lidars become ubiquitous on consumer devices such as phones, tablets, and headsets. Open Access TECHNICAL ARTICLE
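To make "two-bounce time of flight" concrete, here is a minimal geometric sketch. It is not the researchers' implementation. It assumes an idealized point laser spot, a known spot position, and a known unit ray direction for each sensor pixel. Light travels from the laser to the illuminated spot, scatters to a second scene point, and returns to the sensor. The arrival time therefore fixes the total path length, and for a given pixel ray it fixes how far along that ray the second-bounce point lies. All function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

Vec = tuple[float, float, float]


def two_bounce_time(laser: Vec, lit_spot: Vec, point: Vec, sensor: Vec) -> float:
    """Arrival time (s) of light on the path laser -> lit_spot -> point -> sensor."""
    path = (math.dist(laser, lit_spot)
            + math.dist(lit_spot, point)
            + math.dist(point, sensor))
    return path / C


def depth_from_two_bounce(t: float, laser: Vec, lit_spot: Vec,
                          sensor: Vec, ray_dir: Vec) -> float:
    """Distance r along a unit pixel ray from the sensor to the second-bounce point.

    The point x = sensor + r * ray_dir must satisfy |lit_spot - x| + r = L,
    where L is the path length remaining after the first leg (laser -> lit_spot).
    Squaring both sides gives r = (L^2 - |v|^2) / (2 * (L - v . d)),
    with v = lit_spot - sensor and d = ray_dir (assumed unit length).
    """
    remaining = C * t - math.dist(laser, lit_spot)
    v = [a - b for a, b in zip(lit_spot, sensor)]
    v_dot_d = sum(a * b for a, b in zip(v, ray_dir))
    denom = 2.0 * (remaining - v_dot_d)
    if denom <= 0.0:
        raise ValueError("inconsistent measurement or degenerate geometry")
    return (remaining ** 2 - sum(a * a for a in v)) / denom


# Usage: laser and SPAD assumed colocated at the origin (a hypothetical setup).
origin = (0.0, 0.0, 0.0)
spot = (0.0, 0.0, 4.0)       # illuminated spot 4 m away
hidden = (3.0, 0.0, 4.0)     # second-bounce scene point, 5 m from the sensor
t = two_bounce_time(origin, spot, hidden, origin)               # 12 m path, about 40 ns
r = depth_from_two_bounce(t, origin, spot, origin, (0.6, 0.0, 0.8))  # ≈ 5.0 m
```

The inversion recovers depth along a single ray. A scene point that the illuminated spot cannot see (one in shadow) produces no two-bounce return at its pixel. That missing signal is the cue the brief describes for inferring occluded geometry, which the researchers learn jointly with NeRF rather than solving pixel by pixel as this sketch does.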

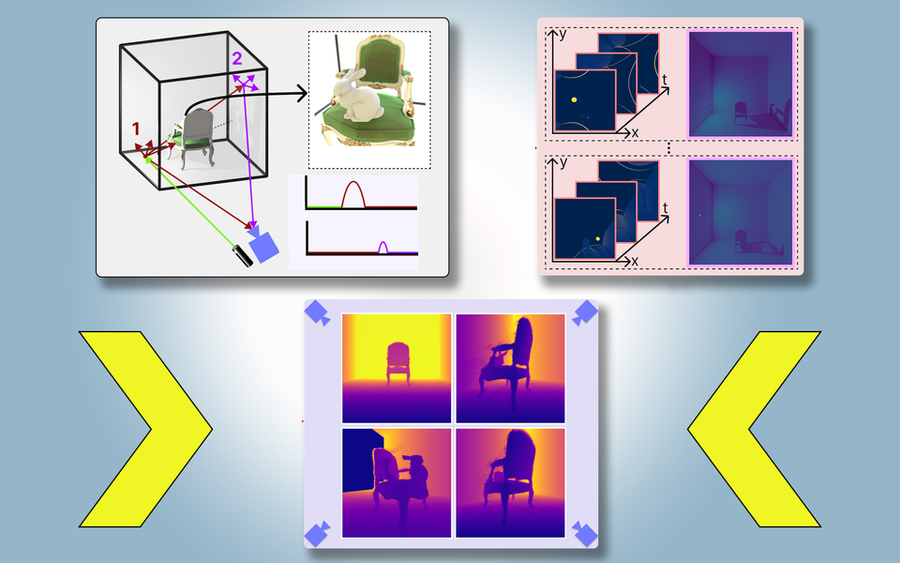

The system accurately models the rabbit in the chair, even though that rabbit is blocked from view… Credit: Courtesy of the researchers, edited by MIT News.